Approximate Reciprocal Square Root

- Two Newton’s Methods

- Floating-Point as an Integer

- Finding a Magic Number

- Comparison with Known Magic Numbers

- Approximate Square Root

- Approximate Cube Root

- References

Two Newton’s Methods

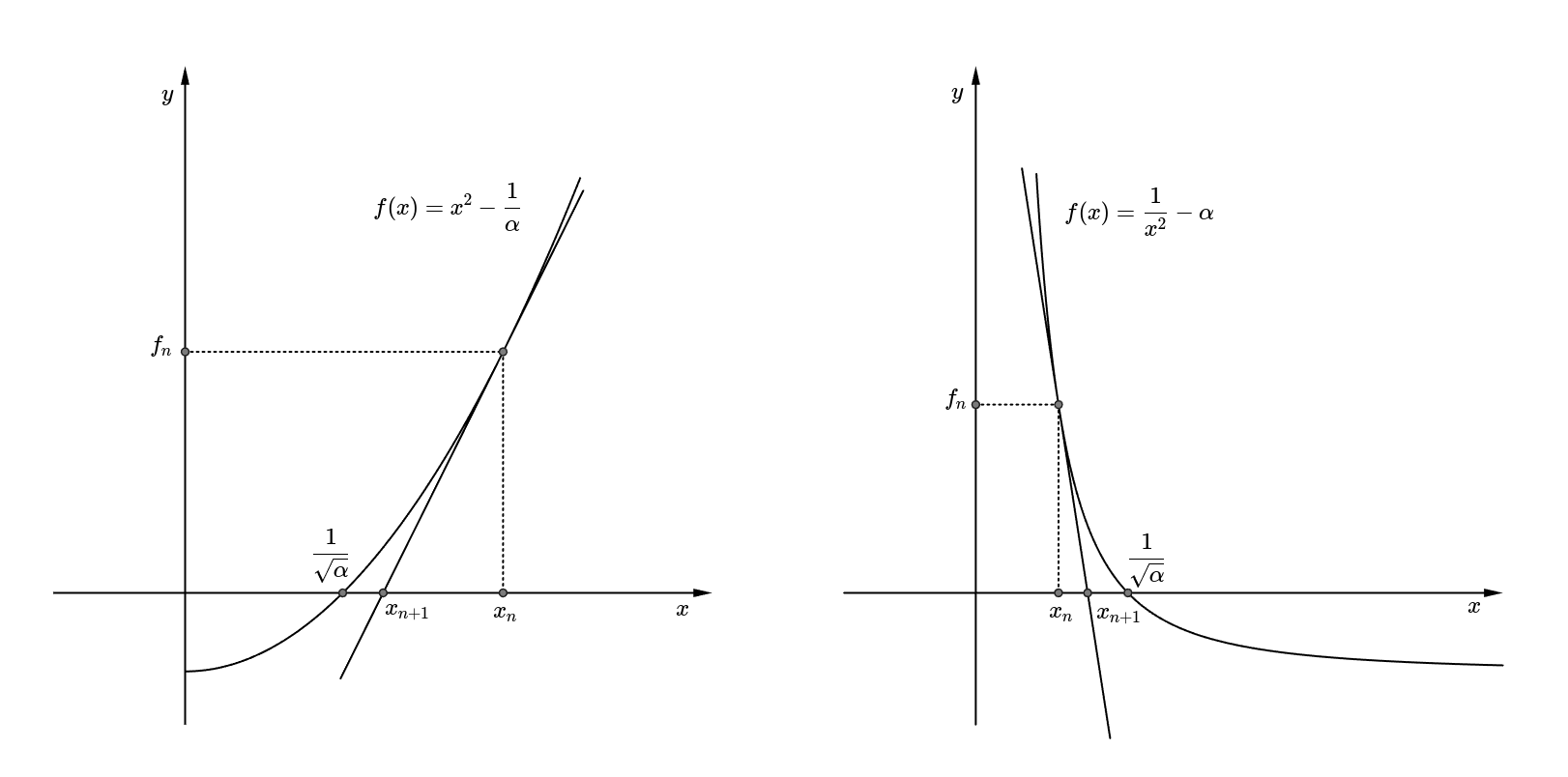

It seems natural to come up with Newton’s method to compute the reciprocal square root because it is a well-known technique for computing the square root. Although is the general approach, I thought could be another candidate for this problem. Applying Newton’s method to each case, the update formula can be obtained as follows. Note that in this note.

While I could guess why is not used since it requires the division operation for every update, it is not sure which function is much harder to set the initial guess at first glance. For now, assume that .

Floating-Point as an Integer

In the well-known fast approximate reciprocal square root algorithm, the surprising main idea, at least I think, is the integer representation of a floating-point. This itself is also a well-known trick, but it is quite surprising at the fact that this can be used to estimate the initial guess of Newton’s method. Since , let where is the exponent and is from the mantissa field such that according to this note. Note that is an integer such that , and is a real number such that . To the further discussion, the integer representation of can be written as

Now, to infer , at first can be reformed to look similar to as follows.

Here, since , a little adjustment can be applied to fall within the range , and finally can be approximated.

Note that since . As such, if shifting right one position and subtracting it from , this result might give a good initial guess for Newton’s method. The code from this may look like as follows. Note that 0x5f400000 is equal to .

float rsqrt(float a)

{

union { int xi; float x; };

x = a;

float a_half = 0.5f * a;

xi = 0x5f400000 - (xi >> 1); // approximation to get the initial guess

x = x * (1.5f - a_half * x * x); // one iteration of Newton's method

return x;

}

However, this code seems not that accurate, so deep analysis is required to find a better value than 0x5f400000. Even though more iterations of Newton’s method may give a better result, it is much more efficient to get a better initial guess. More specifically, magic - (xi >> 1) should be well approximated to for a magic number magic.

Finding a Magic Number

There are a few approaches to finding a magic number with an approximate logarithm or minimizing the relative error. But, I got an idea from the previous section while analyzing the mantissa field to set the rough initial guess, 0x5f400000. Let be this magic number and . Then, the main goal is to find the optimal such that

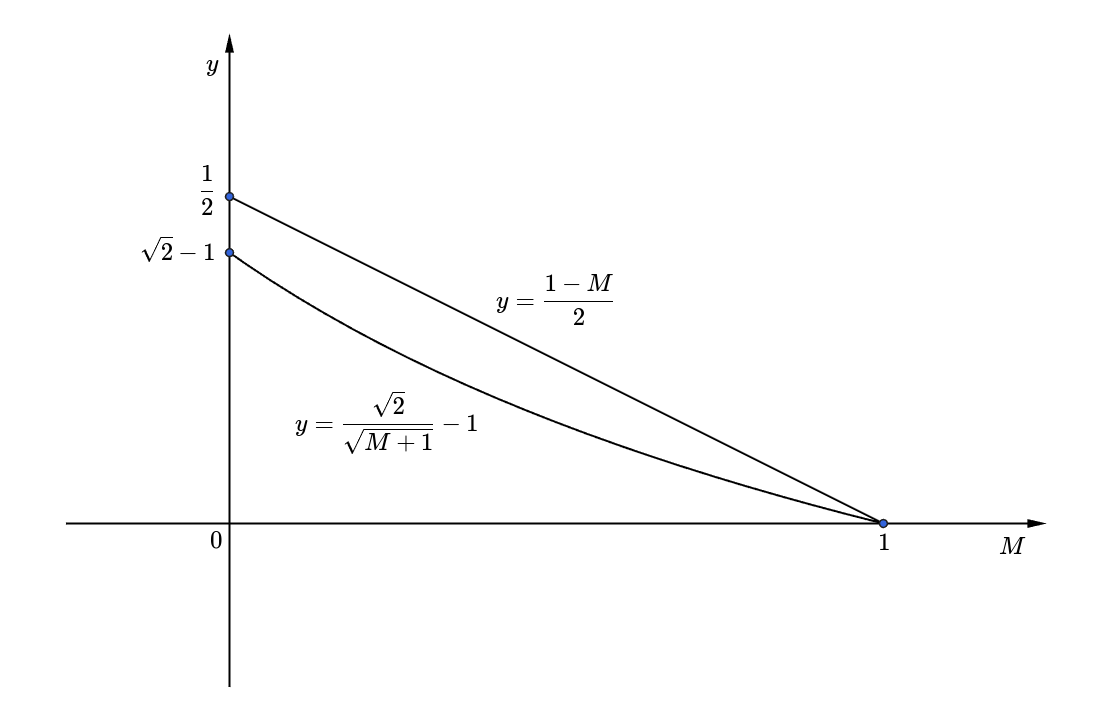

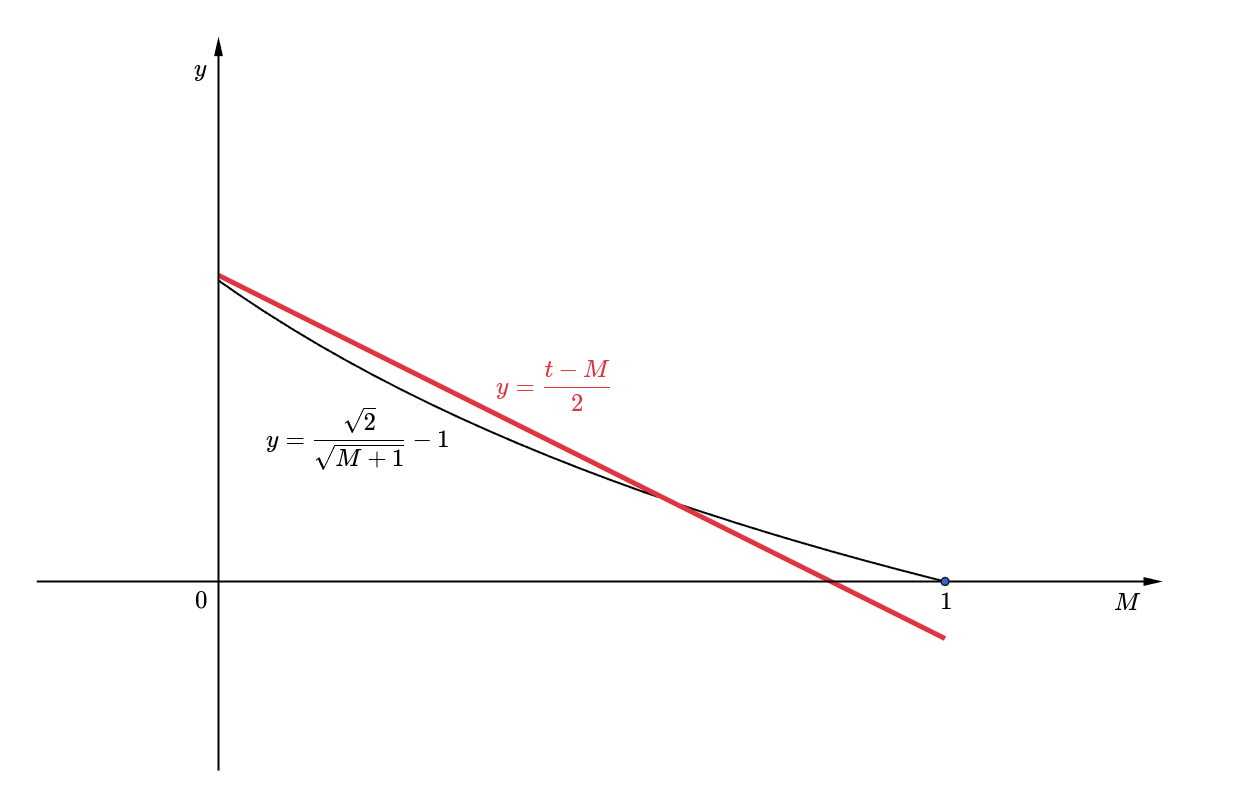

The important thing is that the form must be maintained while processing the optimization due to the (xi >> 1) part. In other words, since had been approximated with a line whose slope is in the previous section, the (xi >> 1) part was able to be possible. As such, this slope must be maintained and the line can move only up and down by a real number . This situation is as below.

Therefore, optimizing this line for is equal to finding the optimal minimizing the following cost function using Least squares.

Unfolding ,

Note that does not even have to be considered because it will be canceled out and is the right one minimizing since it is a convex function.

Accordingly, the found optimal magic number is , which is equal to 0x5f35f61a.

Comparison with Known Magic Numbers

There are already known magic numbers such as 0x5f375a86 and 0x5f37599e. Compared with these magic numbers, it is found that 0x5f35f61a does not perform that better than them after Newton’s steps. It is not that surprising, however, because as Lomont points out, his first optimal constant 0x5f37642f based on the analysis rather showed slightly less accuracy than 0x5f375a86 he found from the trial-and-error. 0x5f37599e is the known best magic number from [1] although no detailed explanation. Although 0x5f35f61a has the higher maximum relative error for all floating-point values, it has not been always the case and was not even rare while testing. So, it might be useful in some situations.

float rsqrt(float a)

{

union { int xi; float x; };

x = a;

float a_half = 0.5f * a;

//xi = 0x5f35f61a - (xi >> 1);

xi = 0x5f37599e - (xi >> 1);

x = x * (1.5f - a_half * x * x);

return x;

}

In modern C++, std::bit_cast is supported through C++20’s, and it can replace union statement.

# include <bit>

# include <limits>

# include <cstdint>

constexpr float rsqrt(float a) noexcept

{

static_assert( std::numeric_limits<float>::is_iec559 ); // (enable only on IEEE 754)

//const float x = std::bit_cast<float>(0x5f35f61a - (std::bit_cast<std::uint32_t>(a) >> 1));

const float x = std::bit_cast<float>(0x5f37599e - (std::bit_cast<std::uint32_t>(a) >> 1));

return x * (1.5f - a * 0.5f * x * x);

}

Furthermore, when applying Newton’s method for , it seems that the relative error is much smaller. However, as mentioned before, it requires division operation, so it might be the reason that it is not used since it is not suited for fast approximation. Besides, it is worth noting, according to Wikipedia, this algorithm is not generally the best choice for modern computers with subsequent hardware advancements, especially the x86 SSE instruction rsqrtss.

Approximate Square Root

Similarly, the approximate square root function can be derived.

The known optimal magic number is 0x1fbb67a8, the code is as below. The used for Newton’s method is the same as the one in this note.

float sqrt(float a)

{

union { int xi; float x; };

x = a;

xi = 0x1fbb67a8 + (xi >> 1);

x = 0.5f * (x + a / x);

return x;

}

Approximate Cube Root

Interestingly, the approximate cube root function can be derived as well. The main problem is now and since it is not the square root using only and , which work fine with the right shift operation. However, the division by 3 can be approximately implemented with the right shifts as well.

This can be evaluated with 7 instructions and slight improvement is also known as below.

float cbrt(float a)

{

union { int xi; float x; };

x = a;

xi = (xi >> 2) + (xi >> 4); // approximate divide by 3

xi += xi >> 4;

xi += xi >> 8;

xi += 0x2a5137a0;

x = 0.33333333f * (2.0f * x + a / (x * x));

return x;

}

But, this method is not as successful as in the case of reciprocal square root and square root.

References

[1] Henry S. Warren. 2012. Hacker’s Delight (2nd. ed.). Addison-Wesley Professional.