Environment Mapping

- Fish-Eye to Latitude-Longitude Image

- Creating Hemi-Sphere Environment

- Light Position Estimation

- References

- Code

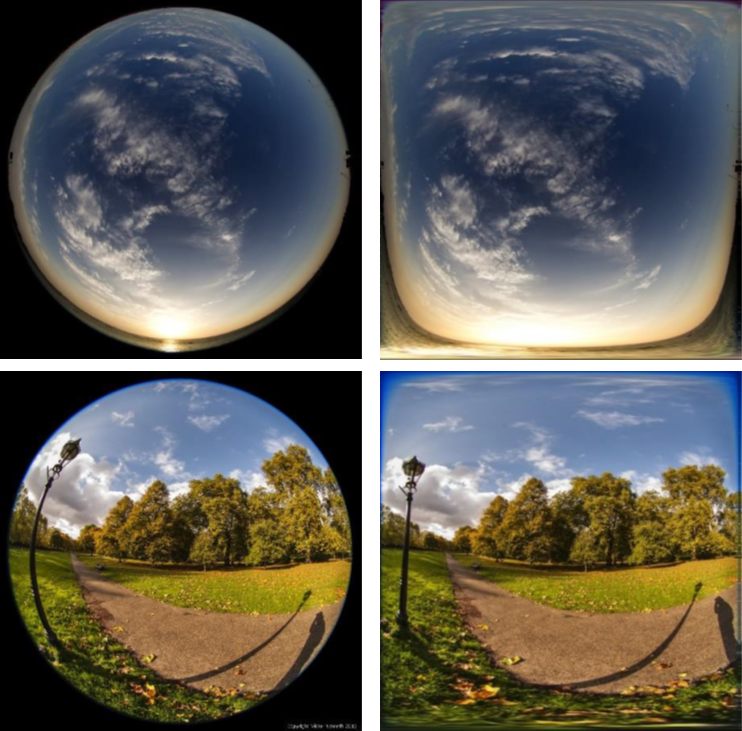

Fish-Eye to Latitude-Longitude Image

A fish-eye image suits environment mapping because it covers a very wide field of view. The cost of that coverage is strong lens distortion, so the image cannot be used directly as an environment texture. To map it onto a hemisphere-shaped environment, it must first be converted to a latitude-longitude image. The conversion can follow [1], [2], or similar material.
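The conversion can be sketched as a reverse lookup: for every pixel of the latitude-longitude image, find the fish-eye pixel that sees the same direction. The sketch below is not the code from this repository. It assumes an equidistant lens model (image radius proportional to the angle from the optical axis), a circular fish-eye image that fills a square of `fisheye_size` pixels, a 180-degree aperture, and an optical axis pointing at the zenith. The function names and parameters are hypothetical.

```python
import math

def latlong_to_fisheye(lon, lat, aperture=math.pi):
    """Map a direction (radians) to normalized fish-eye coords in [-1, 1].

    Assumes an equidistant lens whose optical axis is +z, so lat = pi/2
    lands on the image center. Returns None outside the field of view.
    """
    # Unit direction for this longitude/latitude.
    dx = math.cos(lat) * math.cos(lon)
    dy = math.cos(lat) * math.sin(lon)
    dz = math.sin(lat)
    # Angle between the direction and the optical axis.
    theta = math.atan2(math.hypot(dx, dy), dz)
    if theta > aperture / 2 + 1e-12:
        return None
    # Equidistant model: radius grows linearly with theta.
    r = theta / (aperture / 2)
    phi = math.atan2(dy, dx)
    return (r * math.cos(phi), r * math.sin(phi))

def build_lookup(out_w, out_h, fisheye_size, aperture=math.pi):
    """For each latitude-longitude pixel, the fish-eye pixel to sample.

    Rows run from the zenith (top) to the horizon (bottom), so the
    output covers only the upper hemisphere.
    """
    table = []
    for row in range(out_h):
        lat = (math.pi / 2) * (1.0 - (row + 0.5) / out_h)
        line = []
        for col in range(out_w):
            lon = 2.0 * math.pi * (col + 0.5) / out_w - math.pi
            xy = latlong_to_fisheye(lon, lat, aperture)
            if xy is None:
                line.append(None)
            else:
                # Normalized [-1, 1] to pixel coordinates.
                px = (xy[0] + 1.0) * 0.5 * (fisheye_size - 1)
                py = (xy[1] + 1.0) * 0.5 * (fisheye_size - 1)
                line.append((px, py))
        table.append(line)
    return table
```

The table holds fractional pixel positions, so a real converter would sample the fish-eye image with bilinear interpolation at each entry. A lens that follows a different projection model would need a different radius formula in `latlong_to_fisheye`.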

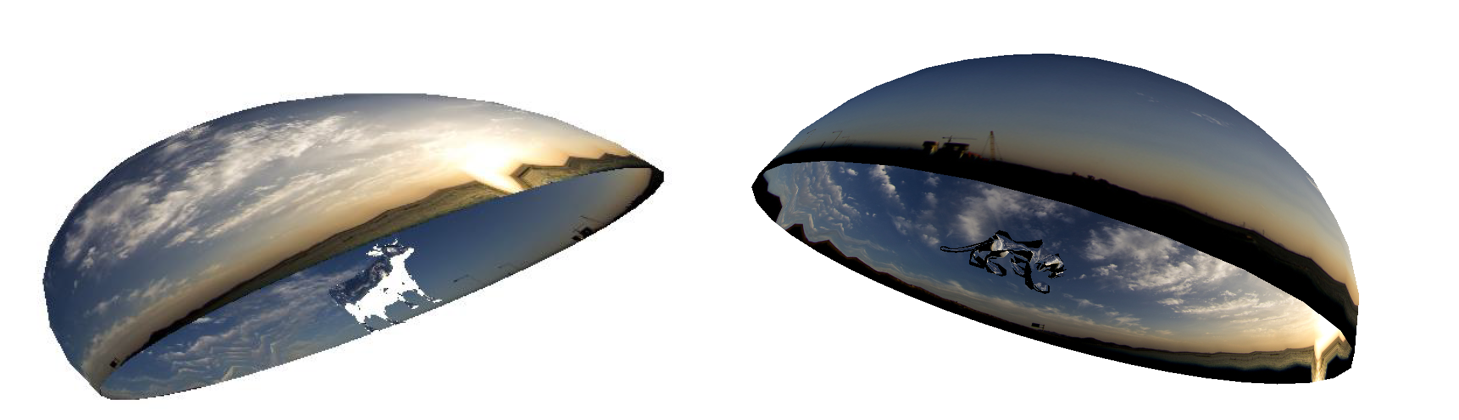

Creating Hemi-Sphere Environment

After the latitude-longitude conversion, the environment can be mapped onto a hemisphere model, as shown above. This is straightforward to do with OpenGL shaders.
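One way to set up that mapping is to build the hemisphere as a grid of latitude and longitude steps and give each vertex a texture coordinate into the latitude-longitude image. The sketch below illustrates this idea. It is not taken from the repository, and the function names, the y-up axis convention, and the `stacks`/`slices` parameters are assumptions.

```python
import math

def hemisphere_vertices(stacks, slices):
    """Vertices of a unit hemisphere as (x, y, z, u, v) tuples.

    y is up (a common OpenGL convention). u follows longitude and v
    follows latitude, so (u, v) indexes the latitude-longitude image.
    """
    vertices = []
    for i in range(stacks + 1):
        lat = (math.pi / 2) * i / stacks          # 0 = horizon, pi/2 = zenith
        for j in range(slices + 1):
            lon = 2.0 * math.pi * j / slices - math.pi
            x = math.cos(lat) * math.cos(lon)
            y = math.sin(lat)
            z = math.cos(lat) * math.sin(lon)
            vertices.append((x, y, z, j / slices, i / stacks))
    return vertices

def hemisphere_indices(stacks, slices):
    """Triangle indices, two triangles per grid cell."""
    indices = []
    for i in range(stacks):
        for j in range(slices):
            a = i * (slices + 1) + j      # lower-left corner of the cell
            b = a + slices + 1            # corner one latitude step up
            indices += [a, b, a + 1, a + 1, b, b + 1]
    return indices
```

The two lists can be uploaded as a vertex buffer and an index buffer, after which a fragment shader only has to sample the latitude-longitude texture at the interpolated `(u, v)`. The seam column is duplicated (`slices + 1` vertices per ring) so that `u` can run from 0 to 1 without wrapping inside a triangle.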

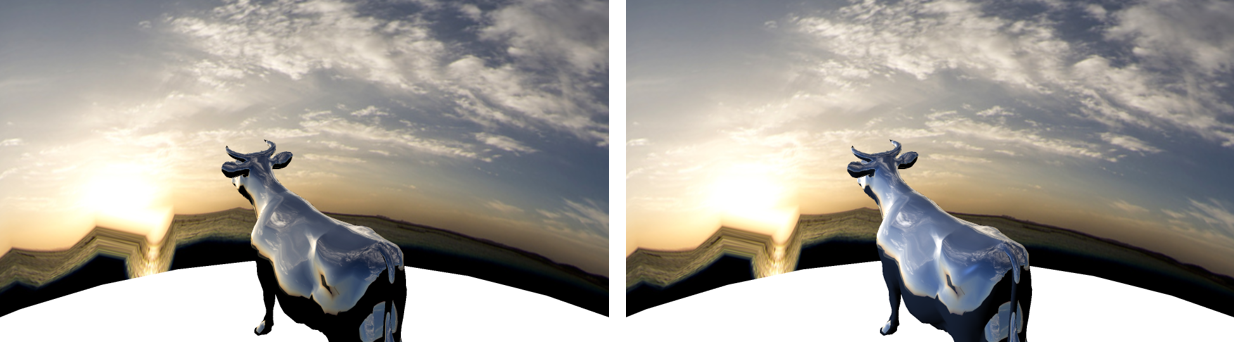

Light Position Estimation

For more realistic environment mapping, shading should be applied to each object in the environment, which here is the hemisphere. Shading requires a light position, so the light positions have to be estimated from the latitude-longitude image. I implemented the Median-Cut [3] and Variance-Cut [4] algorithms and chose the most probable light positions. The images above show the difference: the right image is rendered with the estimated light, and the left one without it. The cow object looks much shinier when the light is considered.
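The idea behind median cut [3] can be sketched briefly: repeatedly split each image region along its longer side so that both halves hold roughly equal light energy, then place one light at the energy-weighted centroid of each final region. The code below is a simplified illustration and not the repository's implementation. It takes a plain 2D list of luminance values, and it leaves out the cosine-of-latitude weighting that a latitude-longitude image needs for correct solid angles, so the caller would have to pre-weight the pixels. All names are hypothetical.

```python
def _balanced_cut(sums):
    """Index k (1 <= k < len) where sums[:k] is closest to half the total."""
    total = sum(sums)
    running, best_k, best_diff = 0.0, 1, float("inf")
    for k in range(1, len(sums)):
        running += sums[k - 1]
        diff = abs(2.0 * running - total)
        if diff < best_diff:
            best_k, best_diff = k, diff
    return best_k

def _centroid(lum, region):
    """Energy-weighted centroid (x, y) and total energy of a region."""
    x0, y0, x1, y1 = region
    energy = cx = cy = 0.0
    for y in range(y0, y1):
        for x in range(x0, x1):
            e = lum[y][x]
            energy += e
            cx += e * (x + 0.5)
            cy += e * (y + 0.5)
    if energy == 0.0:
        # No light in this region: fall back to the geometric center.
        return ((x0 + x1) / 2.0, (y0 + y1) / 2.0, 0.0)
    return (cx / energy, cy / energy, energy)

def median_cut(lum, iterations):
    """Return up to 2**iterations lights as (x, y, energy) tuples."""
    regions = [(0, 0, len(lum[0]), len(lum))]   # (x0, y0, x1, y1), end-exclusive
    for _ in range(iterations):
        next_regions = []
        for (x0, y0, x1, y1) in regions:
            w, h = x1 - x0, y1 - y0
            if w >= h and w > 1:
                # Split along x at the column that balances the energy.
                cols = [sum(lum[y][x] for y in range(y0, y1)) for x in range(x0, x1)]
                cut = x0 + _balanced_cut(cols)
                next_regions += [(x0, y0, cut, y1), (cut, y0, x1, y1)]
            elif h > 1:
                # Split along y at the row that balances the energy.
                rows = [sum(lum[y][x0:x1]) for y in range(y0, y1)]
                cut = y0 + _balanced_cut(rows)
                next_regions += [(x0, y0, x1, cut), (x0, cut, x1, y1)]
            else:
                next_regions.append((x0, y0, x1, y1))  # single pixel
        regions = next_regions
    return [_centroid(lum, r) for r in regions]
```

A possible use is to run `median_cut` on the luminance of the latitude-longitude image, keep the highest-energy results as candidate lights, and convert their pixel positions back to directions on the hemisphere. Recomputing the sums for every region keeps the sketch short; a summed-area table would make the splits much cheaper on large images.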

References

[1] Converting a fisheye image into a panoramic, spherical or perspective projection

[2] Erik Reinhard, Greg Ward, Sumanta Pattanaik, and Paul Debevec, High Dynamic Range Imaging: Acquisition, Display, and Image-Based Lighting (Second Edition)

[3] A median cut algorithm for light probe sampling

[4] Improved variance cut algorithm for light source estimation in augmented reality

Code

https://github.com/emoy-kim/EnvironmentMappingForFishEyeLens